Gradient Descent in Machine Learning: Backbone of Financial Equity Markets

Introduction to Gradient Descent

Welcome to an exploration of the heart of machine learning algorithms and their influential role in shaping the future of financial markets. Today's focus is a cornerstone of machine learning, the Gradient Descent algorithm, and its impact on the Indian financial equity markets. This guide walks through how Gradient Descent works, its real-world applications, its limitations, and its connections with the Decision Tree algorithm in machine learning.

Decoding Gradient Descent

A pivotal question stands before us: "What is Gradient Descent in Machine Learning?"

At its core, Gradient Descent is an iterative optimization algorithm for finding the minimum of a function. In machine learning, its primary purpose is to find the model parameters that minimize the error or, in more formal terms, minimize the cost function.
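
In symbols, writing θ for the model parameters, J(θ) for the cost function and η for the learning rate (notation chosen here for illustration), every iteration applies the same update. For the one-feature linear model ŷᵢ = w·xᵢ + b used in the Python example later in this article, the cost and its partial derivatives are:

$$
\theta_{t+1} = \theta_t - \eta \, \nabla J(\theta_t)
$$

$$
J(w, b) = \frac{1}{2n} \sum_{i=1}^{n} (\hat{y}_i - y_i)^2, \qquad
\frac{\partial J}{\partial w} = \frac{1}{n} \sum_{i=1}^{n} (\hat{y}_i - y_i)\, x_i, \qquad
\frac{\partial J}{\partial b} = \frac{1}{n} \sum_{i=1}^{n} (\hat{y}_i - y_i)
$$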

Consider a vast, mountainous landscape filled with diverse peaks and valleys. The objective of Gradient Descent is to find a low valley without exploring the entire landscape. It starts at some point, computes the direction of steepest descent (the negative of the gradient), and takes a step in that direction, with the step size set by the learning rate. This process repeats until the algorithm reaches a point where every direction leads uphill, that is, where the gradient is (close to) zero, indicating a local minimum.
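
The loop described above fits in a few lines of Python. This is a minimal sketch, assuming a one-dimensional cost function whose derivative we can write by hand; the function name `gradient_descent_1d` and the example f(x) = (x − 3)² are hypothetical choices for illustration, not part of any library.

```python
def gradient_descent_1d(grad, x0, learning_rate=0.1, tol=1e-8, max_iters=10_000):
    """Minimize a 1-D function given its derivative `grad`, starting from x0."""
    x = x0
    for _ in range(max_iters):
        g = grad(x)
        if abs(g) < tol:  # slope is (almost) flat: no downhill direction left
            break
        x -= learning_rate * g  # step downhill, against the slope
    return x


# Hypothetical example: f(x) = (x - 3)**2 has derivative 2 * (x - 3) and its minimum at x = 3
x_min = gradient_descent_1d(lambda x: 2 * (x - 3), x0=-5.0)
print(round(x_min, 6))  # 3.0
```

The stopping rule `abs(g) < tol` is the code version of "every direction leads uphill": once the slope is essentially zero, there is nowhere further downhill to go.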

The Power of Gradient Descent in Portfolio Optimization

With a grasp of the concept of Gradient Descent, let's delve into its practical applications. The Indian Financial Equity Market, with its size and dynamic nature, offers a natural setting for applying Gradient Descent.

Portfolio optimization, a critical aspect of financial planning, is an exemplary application. Here, the goal is to minimize risk, typically measured as the variance of portfolio returns, for a given level of expected return by choosing the proportions (weights) of the various assets. While the task seems straightforward at first glance, it grows harder with the number of assets: a portfolio of n assets involves n(n − 1)/2 pairwise covariances, all of which feed into the risk calculation.
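
For reference, the classic mean-variance form of this problem can be written as below, assuming short positions are allowed (so there is no w ≥ 0 constraint). Here w is the vector of asset weights, Σ the covariance matrix of asset returns, μ the vector of expected returns and r* the target return; the notation is ours. The gradient of the objective, which is what Gradient Descent follows, is 2Σw.

$$
\begin{aligned}
\min_{\mathbf{w}} \quad & \mathbf{w}^\top \Sigma \, \mathbf{w} \\
\text{subject to} \quad & \boldsymbol{\mu}^\top \mathbf{w} = r^{*}, \qquad \mathbf{1}^\top \mathbf{w} = 1
\end{aligned}
$$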

This is where Gradient Descent enters the scene. It iteratively adjusts the asset weights, each time moving them in the direction that most reduces portfolio risk while keeping the constraints satisfied (for example, weights that sum to one and meet the return target). The closely related problem of maximizing return for a given level of risk can be approached the same way. Applying the "Gradient Descent algorithm in machine learning" like this gives investors a systematic, data-driven method for constructing portfolios.
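
One minimal way to sketch this is projected gradient descent: take an ordinary gradient step on the portfolio variance, then remove the part of the step that would break the constraints. This is an illustration under stated assumptions, not a production optimizer: short positions are allowed, the function name `min_variance_portfolio` is hypothetical, and the four-asset returns, volatilities and correlations are made-up numbers, not market data. The result is cross-checked against the exact solution of the problem's KKT (Lagrange) equations.

```python
import numpy as np


def min_variance_portfolio(cov, mu, target_return, learning_rate=1.0, iterations=10_000):
    """Projected gradient descent for: minimize w^T cov w
    subject to sum(w) = 1 and mu^T w = target_return (short positions allowed)."""
    n = len(mu)
    A = np.vstack([np.ones(n), mu])         # rows: budget constraint, return constraint
    c = np.array([1.0, target_return])
    AAt_inv = np.linalg.inv(A @ A.T)
    P = np.eye(n) - A.T @ AAt_inv @ A       # keeps only step directions that preserve A @ w == c
    w = A.T @ AAt_inv @ c                   # a feasible starting point (satisfies both constraints)
    for _ in range(iterations):
        grad = 2 * cov @ w                  # gradient of the portfolio variance w^T cov w
        w = w - learning_rate * (P @ grad)  # projected step: lowers variance, constraints still hold
    return w


# Hypothetical inputs for four assets (illustrative numbers, not market data)
mu = np.array([0.12, 0.10, 0.07, 0.15])     # expected annual returns
vol = np.array([0.20, 0.15, 0.10, 0.25])    # annual volatilities
corr = np.array([[1.0, 0.3, 0.1, 0.4],
                 [0.3, 1.0, 0.2, 0.3],
                 [0.1, 0.2, 1.0, 0.1],
                 [0.4, 0.3, 0.1, 1.0]])
cov = np.outer(vol, vol) * corr

weights = min_variance_portfolio(cov, mu, target_return=0.10)

# Exact solution of the same problem from its KKT system, for comparison
A = np.vstack([np.ones(4), mu])
kkt = np.block([[2 * cov, A.T], [A, np.zeros((2, 2))]])
exact = np.linalg.solve(kkt, np.concatenate([np.zeros(4), [1.0, 0.10]]))[:4]
print(np.round(weights, 4), np.allclose(weights, exact, atol=1e-6))
```

The learning rate of 1.0 looks large, but it is scaled to the small magnitude of the covariance entries (annual variances of at most 0.0625 here); much larger values would make the iterations diverge, which is the learning-rate sensitivity discussed in the limitations below.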

Understanding the Limitations of Gradient Descent

Despite its numerous advantages, Gradient Descent isn't without limitations; even the most powerful tools in machine learning have their own constraints.

One of the primary limitations of Gradient Descent involves its tendency to get trapped in local minima for non-convex problems, leading to sub-optimal solutions. Consider a landscape with multiple valleys. Gradient Descent might settle in the nearest valley, mistaking it for the deepest, thereby missing the global minimum.
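
The trap is easy to reproduce. This sketch runs plain Gradient Descent on a hypothetical one-dimensional function, f(x) = x⁴ − 3x² + x, chosen only because it has a deeper valley near x ≈ −1.30 and a shallower one near x ≈ 1.13. The helper names `f`, `df` and `descend` are illustrative.

```python
def f(x):
    return x**4 - 3 * x**2 + x   # non-convex: two valleys of different depth


def df(x):
    return 4 * x**3 - 6 * x + 1  # derivative of f


def descend(x, learning_rate=0.01, iterations=1000):
    """Plain gradient descent from starting point x."""
    for _ in range(iterations):
        x -= learning_rate * df(x)
    return x


x_right = descend(2.0)   # start on the right, near the shallower valley
x_left = descend(-2.0)   # start on the left, near the deeper valley
print(f"start  2.0 -> x = {x_right:.3f}, f(x) = {f(x_right):.3f}")
print(f"start -2.0 -> x = {x_left:.3f}, f(x) = {f(x_left):.3f}")
```

Starting from x = 2, the algorithm settles at about x = 1.13 (f ≈ −1.07) and never sees the deeper valley at about x = −1.30 (f ≈ −3.51), which the run from x = −2 finds.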

Gradient Descent is also sensitive to the initial point and the learning rate, and its performance depends heavily on both. A learning rate that is too small can result in slow convergence, while one that is too large can cause the algorithm to overshoot the minimum and diverge.
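
A quick way to see the learning-rate effect is the simplest possible cost, f(x) = x², whose gradient is 2x. Each step multiplies x by (1 − 2η), so the behaviour can be predicted exactly. The function name `run` and the three rates are illustrative choices.

```python
def run(learning_rate, x0=5.0, steps=50):
    """Gradient descent on f(x) = x**2 (gradient 2x, minimum at x = 0)."""
    x = x0
    for _ in range(steps):
        x -= learning_rate * 2 * x
    return x


for lr in (0.01, 0.1, 1.1):
    print(f"learning rate {lr}: x after 50 steps = {run(lr):.6g}")
```

With η = 0.01 the iterate is still about 1.8 after 50 steps (slow convergence); η = 0.1 brings it within 10⁻⁴ of the minimum; η = 1.1 multiplies x by −1.2 on every step, so it overshoots back and forth with growing amplitude and diverges.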

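These challenges lead to the "Stochastic Gradient Descent vs Gradient Descent" discussion. Standard (batch) Gradient Descent computes the gradient over the entire training set before each update, whereas Stochastic Gradient Descent updates the parameters after each individual training example. Each update is much cheaper, which often leads to faster convergence on large datasets, and the noise in the individual updates can help the algorithm escape shallow local minima in non-convex problems, at the cost of a less smooth path toward the minimum.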
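
Here is a minimal sketch of SGD for a one-feature linear model like the one in the code example later in this article. The function name, learning rate, epoch count and synthetic data are illustrative assumptions; the point is that the parameters change after every single example rather than once per full pass over the data.

```python
import numpy as np


def sgd_linear_regression(X, y, learning_rate=0.01, epochs=200, seed=0):
    """Fit y = w * X + b with SGD: one parameter update per training example."""
    rng = np.random.default_rng(seed)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for i in rng.permutation(len(y)):      # visit examples in a fresh random order each epoch
            error = (w * X[i] + b) - y[i]      # prediction error on a single example
            w -= learning_rate * error * X[i]  # gradient of 0.5 * error**2 w.r.t. w
            b -= learning_rate * error         # gradient of 0.5 * error**2 w.r.t. b
    return w, b


# Synthetic data: y = 2X + small Gaussian noise
rng = np.random.default_rng(42)
X = rng.random(100)
y = 2 * X + rng.normal(0, 0.1, 100)
w, b = sgd_linear_regression(X, y)
print(f"w = {w:.3f}, b = {b:.3f}")
```

Shuffling each epoch is a common design choice; it keeps the updates from following the same fixed order through the data every time.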

An Intuitive Example of Gradient Descent

With the theory and practical applications in place, let's explore how Gradient Descent works through a simple, relatable analogy. The same intuition carries over to "Gradient Descent in deep learning", where the algorithm is used to train neural networks.

Imagine a blindfolded person standing on hilly terrain, trying to find the lowest point. They can feel the slope under their feet and take a step in the direction of steepest descent. They repeat this until they reach a point where every direction around them leads uphill. This scenario mirrors the process of Gradient Descent in machine learning!

Connecting Gradient Descent and Decision Trees

Now, let's draw connections with another vital tool in Machine Learning: the Decision Tree algorithm. Understanding "what is a decision tree in machine learning" helps complete the broader picture.

A Decision Tree is a flowchart-like structure in which each internal node tests a feature, each branch represents a decision rule (an outcome of that test), and each leaf node represents an outcome or prediction. It offers a practical, transparent method for decision-making and prediction.
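
To make the structure concrete, here is a tiny hand-built tree stored as nested Python dictionaries, plus a function that walks from the root to a leaf. The features (`debt_to_equity`, `interest_coverage`), thresholds and outcomes are hypothetical, loosely in the spirit of the credit-scoring use case mentioned below; a real tree would be learned from data rather than written by hand.

```python
# Hypothetical credit-screening tree: feature names, thresholds and outcomes are illustrative only.
tree = {
    "feature": "debt_to_equity", "threshold": 1.5,   # internal node: a test on one feature
    "left": {
        "feature": "interest_coverage", "threshold": 3.0,
        "left": {"leaf": "review"},                  # leaf nodes hold the outcome
        "right": {"leaf": "approve"},
    },
    "right": {"leaf": "decline"},
}


def predict(node, sample):
    """Follow the decision rules from the root until a leaf (outcome) is reached."""
    while "leaf" not in node:
        feature_value = sample[node["feature"]]
        node = node["left"] if feature_value <= node["threshold"] else node["right"]
    return node["leaf"]


print(predict(tree, {"debt_to_equity": 0.8, "interest_coverage": 5.0}))  # approve
print(predict(tree, {"debt_to_equity": 2.4, "interest_coverage": 6.0}))  # decline
```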

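The Decision Tree algorithm in Machine Learning and Gradient Descent share a common goal: to reduce a cost function, though they do it in different ways. For Decision Trees, the cost is typically the Gini impurity or entropy, which measure how mixed the class labels in a node are. At each node, the algorithm greedily picks the split that produces the greatest decrease in impurity, much as Gradient Descent takes each step in the direction of the steepest decrease in the cost function. Unlike Gradient Descent, however, tree building searches over discrete split points rather than following a gradient. The two ideas meet directly in gradient-boosted trees, where each new tree is fitted to the negative gradient of the loss with respect to the current model's predictions.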
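
The "greatest decrease in impurity" rule can be sketched for a single feature as follows. The helper names `gini` and `best_split` and the small dataset (a one-month return signal and whether the stock rose the following month) are hypothetical; full tree-building algorithms repeat this search over every feature and recurse into the child nodes.

```python
import numpy as np


def gini(labels):
    """Gini impurity: 1 - sum of squared class proportions (0 means a pure node)."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)


def best_split(x, labels):
    """Greedy search over thresholds on one feature for the largest impurity decrease."""
    parent = gini(labels)
    best_threshold, best_gain = None, 0.0
    for threshold in np.unique(x)[:-1]:  # the largest value would leave the right side empty
        left, right = labels[x <= threshold], labels[x > threshold]
        weighted = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if parent - weighted > best_gain:
            best_threshold, best_gain = threshold, parent - weighted
    return best_threshold, best_gain


# Hypothetical data: one-month return signal, and 1 if the stock rose the next month, else 0
signal = np.array([-0.05, -0.03, -0.01, 0.00, 0.02, 0.04, 0.06])
rose = np.array([0, 0, 0, 1, 1, 1, 1])
threshold, gain = best_split(signal, rose)
print(float(threshold), round(float(gain), 4))  # -0.01 0.4898
```

Splitting at −0.01 separates the two classes perfectly, so the impurity drops from 24/49 ≈ 0.4898 in the parent node to 0 in both children.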

Decision Trees are applied in financial markets in areas such as credit scoring and algorithmic trading. They support data-driven investment decisions, which makes them a useful tool in dynamic markets such as the Indian Financial Equity Markets.

```python
import numpy as np
import matplotlib.pyplot as plt


# Cost function: half the mean squared error
def compute_cost(y, y_pred):
    n = len(y)
    cost = (1 / (2 * n)) * np.sum((y_pred - y) ** 2)
    return cost


# Gradient Descent algorithm for the one-feature linear model y = w * X + b
def gradient_descent(X, y, learning_rate=0.01, iterations=1000, threshold=0.0001):
    n = len(y)
    cost_history = np.zeros(iterations)
    w, b = np.random.rand(2)  # Initialize weight and bias randomly in [0, 1)

    for iteration in range(iterations):
        y_pred = w * X + b
        cost = compute_cost(y, y_pred)
        cost_history[iteration] = cost

        dw = -(1 / n) * np.sum(X * (y - y_pred))  # derivative of the cost w.r.t. w
        db = -(1 / n) * np.sum(y - y_pred)        # derivative of the cost w.r.t. b

        w = w - learning_rate * dw  # update w
        b = b - learning_rate * db  # update b

        # Stop early once the cost changes by less than `threshold` between iterations
        if iteration > 0 and abs(cost_history[iteration] - cost_history[iteration - 1]) <= threshold:
            break

    # Drop the unused (zero) entries left over after an early stop
    return w, b, cost_history[:iteration + 1]


# Main function
def main():
    # Generate linearly related random data: y = 2X + Gaussian noise
    np.random.seed(0)
    X = np.random.rand(100)
    y = 2 * X + np.random.normal(0, 0.1, 100)

    # Apply Gradient Descent
    w, b, cost_history = gradient_descent(X, y, learning_rate=0.05, iterations=1000)

    # Plot the cost function
    plt.figure(figsize=(10, 6))
    plt.plot(range(len(cost_history)), cost_history)
    plt.xlabel('Iteration')
    plt.ylabel('Cost')
    plt.title('Cost function approaching its minimum')
    plt.show()

    # Plot the best-fit line
    plt.figure(figsize=(10, 6))
    plt.scatter(X, y, color='blue')
    plt.plot(X, w * X + b, color='red')
    plt.xlabel('Independent variable')
    plt.ylabel('Dependent variable')
    plt.title('Best fit line using Gradient Descent')
    plt.show()

    print(f'Optimal parameters are: w = {w}, b = {b}')


if __name__ == '__main__':
    main()
```

Output:

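The program prints the fitted weight (w) and bias (b), approximately 1.40 and 0.32 respectively. These fall short of the values used to generate the data (w = 2, b = 0): with `threshold=0.0001`, the loop stops once the cost improves by less than 0.0001 between iterations, before the parameters have converged. A smaller `threshold` (with enough iterations) brings the estimates closer to the least-squares fit.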

Optimal parameters are: w = 1.4037211586965193, b = 0.32119782600566504

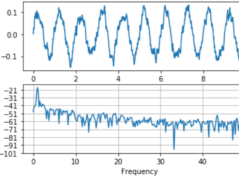

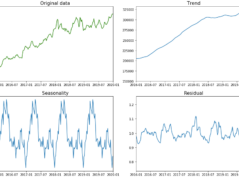

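- The first plot shows how the cost decreases over the iterations. The cost falls rapidly at first and then more slowly, flattening out until the change between consecutive iterations drops below `threshold` and the loop stops. For this linear model the cost function is convex, so it has a single (global) minimum; a flattening curve shows that progress has slowed, not that the minimum has been reached exactly.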

- The second plot shows the line fitted by Gradient Descent. The blue dots represent the actual data points, and the red line represents the fitted line. The line captures the upward trend in the data, although it is flatter than the relationship used to generate the data (slope 2, intercept 0) because the run stopped before the parameters fully converged.
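
To see how far an early stop can leave the estimates from the best possible fit, one option is to run the same update rule with no stopping threshold and compare the result with NumPy's closed-form least-squares fit, `np.polyfit`. This is a sketch: it starts from w = b = 0 instead of a random point, and the 20,000-iteration count is an arbitrary choice that is comfortably enough for this data.

```python
import numpy as np

# Same data-generating recipe as the example above: y = 2X + noise
np.random.seed(0)
X = np.random.rand(100)
y = 2 * X + np.random.normal(0, 0.1, 100)

# Plain batch gradient descent with no early-stopping threshold
w, b = 0.0, 0.0
learning_rate = 0.05
for _ in range(20_000):
    y_pred = w * X + b
    dw = np.mean(X * (y_pred - y))  # dJ/dw for J = mean((y_pred - y)**2) / 2
    db = np.mean(y_pred - y)        # dJ/db
    w -= learning_rate * dw
    b -= learning_rate * db

# Closed-form least-squares fit for comparison (np.polyfit returns [slope, intercept])
slope, intercept = np.polyfit(X, y, 1)
print(f"gradient descent: w = {w:.4f}, b = {b:.4f}")
print(f"least squares:    w = {slope:.4f}, b = {intercept:.4f}")
```

Because the cost is convex and the learning rate is small enough to be stable, running long enough lets Gradient Descent match the least-squares solution.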

This example showcases how the Gradient Descent algorithm finds the parameters of a model, which in this case is a simple linear model. However, the algorithm's true potential lies in optimizing more complex models used in various fields, including financial markets.

Conclusion

Gradient Descent, an unsung hero in the realm of Machine Learning, holds immense potential for optimizing complex problems. Its application in financial markets, particularly in portfolio optimization, makes it a pivotal tool for financial decision-making. Despite its limitations, with a well-chosen learning rate, starting point, and stopping criterion, it can produce accurate predictive models.

Gradient Descent and Decision Trees complement each other, and together they support data-driven, optimized solutions in the financial domain. As we continue to unravel the intricacies of the Indian Financial Equity Markets, these algorithms serve as invaluable guides in our exploration.

The world of Machine Learning, with its algorithms and applications in various sectors, invites you to delve deeper. Harness the power of tools like Gradient Descent and Decision Trees to unlock new insights in the ever-evolving financial markets. Empower yourself with knowledge, and embark on this exciting journey into the future of finance. The world of financial markets awaits your expertise!

Follow Quantace Research

-------------

Why Should I Do Alpha Investing with Quantace Tiny Titans?

1) Since Apr 2021, our premier basket product has delivered +44.7% absolute returns vs the Smallcap Benchmark Index return of +7.7%. So, we added 37% Alpha.

2) Our Sharpe Ratio is 1.4.

3) Our Annualised Risk is 20.1% vs the Benchmark's 20.4%. So, a better ROI at less risk.

4) It has generated Alpha in a challenging market phase.

5) It has been consistent and costs 6000 INR for 6 months.

-------------

Disclaimer: Investments in securities market are subject to market risks. Read all the related documents carefully before investing. Registration granted by SEBI and certification from NISM in no way guarantee performance of the intermediary or provide any assurance of returns to investors.

-------------
